A Playful Game Changer: Fostering Student Retention in Online Education with Social Gamification

Markus Krause, Marc Mogalle, Henning Pohl, Joseph Jay Williams

L@S ’15

page 95-102

ACM

(New York, NY, USA)

2015

Many MOOCs report high drop-off rates for their students. Among the factors reportedly contributing to this picture are lack of motivation, feelings of isolation, and lack of interactivity in MOOCs. This paper investigates the potential of gamification with social game elements for increasing retention and learning success. Students in our experiment showed a significant increase of 25% in retention period (videos watched) and 23% higher average scores when the course interface was gamified. Social game elements amplify this effect significantly – students in this condition showed an increase of 50% in retention period and 40% higher average test scores.

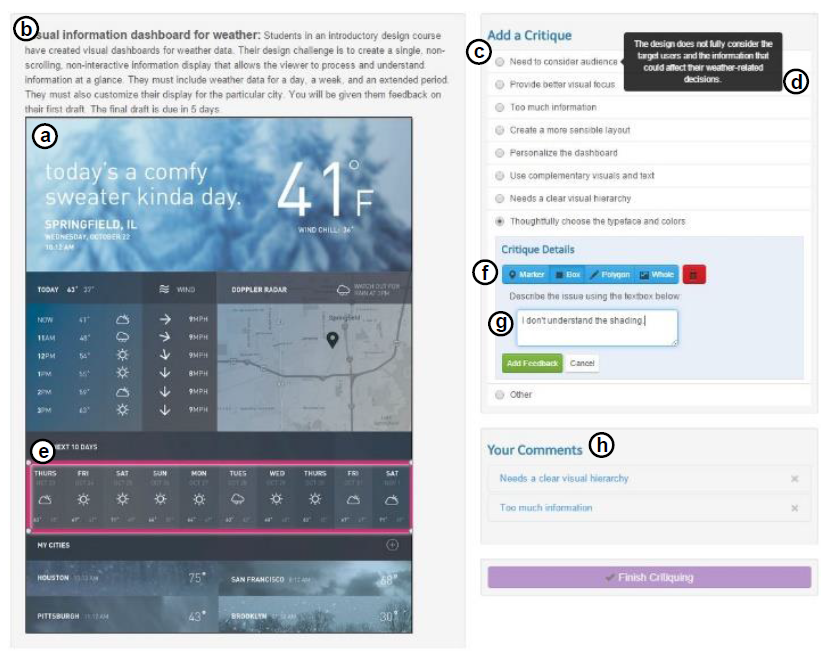

Almost an Expert: The Effects of Rubrics and Expertise on Perceived Value of Crowdsourced Design Critiques

Alvin Yuan, Kurt Luther, Markus Krause, Sophie Isabel Vennix, Steven P Dow, Bjorn Hartmann

CSCW ’16

page 1005-1017

ACM

(New York, NY, USA)

2016

Expert feedback is valuable but hard to obtain for many designers. Online crowds can provide fast and affordable feedback, but workers may lack relevant domain knowledge and experience. Can expert rubrics address this issue and help novices provide expert-level feedback? To evaluate this, we conducted an experiment with a 2x2 factorial design. Student designers received feedback on a visual design from both experts and novices, who produced feedback using either an expert rubric or no rubric. We found that rubrics helped novice workers provide feedback that was rated nearly as valuable as expert feedback. A follow-up analysis on writing style showed that student designers found feedback most helpful when it was emotionally positive and specific, and that a rubric increased the occurrence of these characteristics in feedback. The analysis also found that expertise correlated with longer critiques, but not the other favorable characteristics. An informal evaluation indicates that experts may instead have produced value by providing clearer justifications.

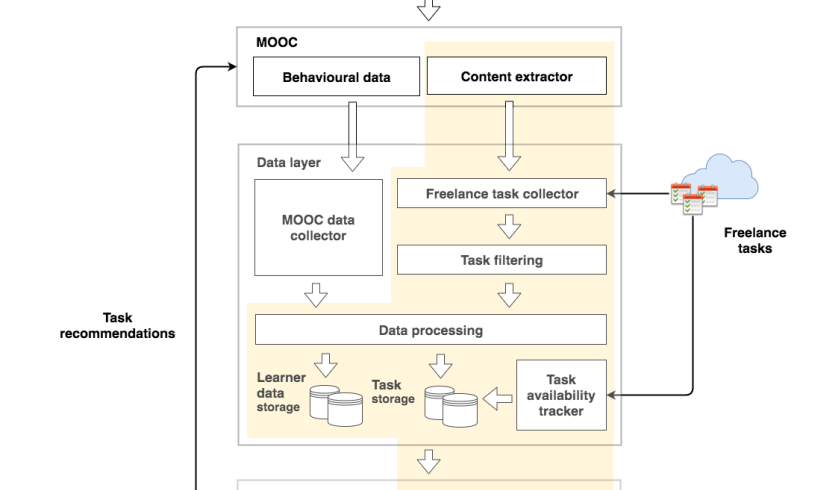

Buying Time: Enabling Learners to Become Earners with a Real-world Paid Task Recommender System

Guanliang Chen, Dan Davis, Markus Krause, Claudia Hauff, Geert-Jan Houben

LAK ’17

page 578-579

ACM

(New York, NY, USA)

2017

Massive Open Online Courses (MOOCs) aim to educate the world, especially learners from developing countries. While MOOCs are certainly available to the masses, they are not yet fully accessible. Although all course content is just clicks away, deeply engaging with a MOOC requires a substantial time commitment, which frequently becomes a barrier to success. To mitigate the time required to learn from a MOOC, we here introduce a design that enables learners to earn money by applying what they learn in the course to real-world marketplace tasks. We present a Paid Task Recommender System (Rec-\$ys), which automatically recommends course-relevant tasks to learners as drawn from online freelance platforms. Rec-\$ys has been deployed into a data analysis MOOC and is currently under evaluation.

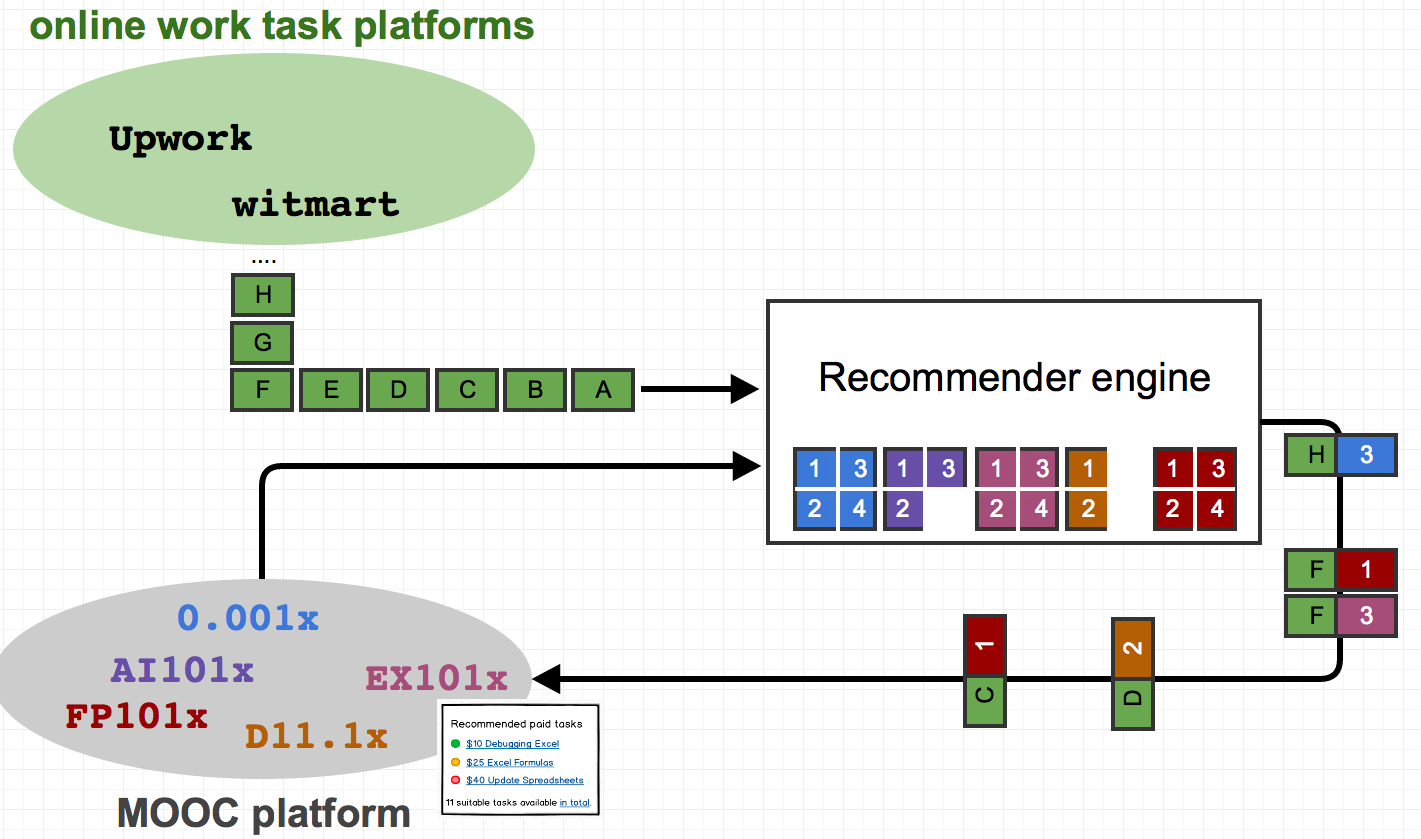

Can Learners be Earners? Investigating a Design to Enable MOOC Learners to Apply their Skills and Earn Money in an Online Market Place

G. Chen, D. Davis, M. Krause, E. Aivaloglou, C. Hauff, G. J. Houben

IEEE Transactions on Learning Technologies

page 1-1

2016

Massive Open Online Courses (MOOCs) aim to educate the world. More often than not, however, MOOCs fall short of this goal: a majority of learners are already highly educated (with a Bachelor's degree or more) and come from specific parts of the (developed) world. Learners from developing countries without a higher degree are underrepresented, though desired, in MOOCs. One reason for those learners to drop out of a course can be found in their financial realities and the subsequent limited amount of time they can dedicate to a course besides earning a living. If we could pay learners to take a MOOC, this hurdle would largely disappear. With MOOCs, this leads to the following fundamental challenge: How can learners be paid at scale? Ultimately, we envision a recommendation engine that recommends to learners tasks from online market places such as Upwork or witmart that are relevant to the course content of the MOOC. In this manner, the learners learn and earn money. To investigate the feasibility of this vision, in this paper we explored to what extent (1) online market places contain tasks relevant to a specific MOOC, and (2) learners are able to solve real-world tasks correctly and with sufficient quality. Finally, based on our experimental design, we were also able to investigate the impact of real-world bonus tasks in a MOOC on the general learner population.

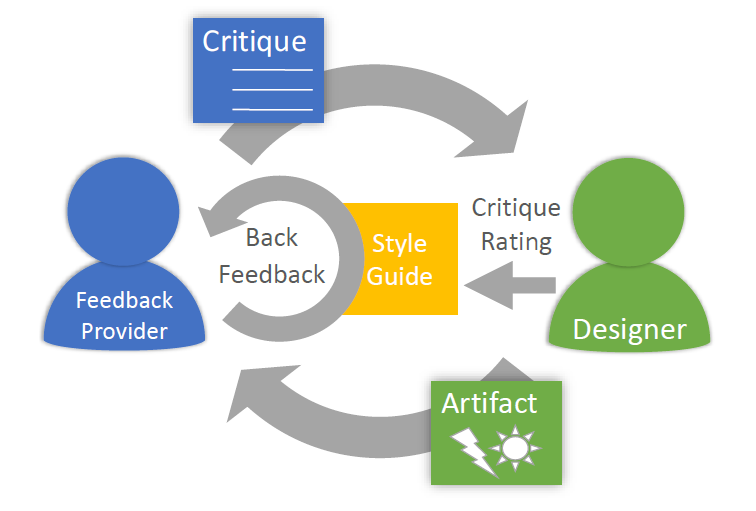

Critique Style Guide: Improving Crowdsourced Design Feedback with a Natural Language Model

Markus Krause, Tom Garncarz, JiaoJiao Song, Elizabeth M. Gerber, Brian P. Bailey, Steven P. Dow

CHI ’17

page 4627-4639

ACM

(New York, NY, USA)

2017

Designers are increasingly leveraging online crowds; yet, online contributors may lack the expertise, context, and sensitivity to provide effective critique. Rubrics help feedback providers but require domain experts to write them and may not generalize across design domains. This paper introduces and tests a novel semi-automated method to support feedback providers by analyzing feedback language. In our first study, 52 students from two design courses created design solutions and received feedback from 176 online providers. Instructors, students, and crowd contributors rated the helpfulness of each feedback response. From this data, an algorithm extracted a set of natural language features (e.g., specificity and sentiment) that correlated with the ratings. The features accurately predicted the ratings and remained stable across different raters and design solutions. Based on these features, we produced a critique style guide with feedback examples, automatically selected for each feature, to help providers revise their feedback through self-assessment. In a second study, we tested the validity of the guide through a between-subjects experiment (n=50). Providers wrote feedback on design solutions with or without the guide. Providers generated feedback with higher perceived helpfulness when using our style-based guidance.

Mooqita: Empowering Hidden Talents with a Novel Work-Learn Model

Markus Krause, Doris Schiöberg, Jan David Smeddinck

CHI eA ’18

page 141-1410

ACM

(New York, NY, USA)

2018

We present a case study of Mooqita, a platform to support learners in online courses by enabling them to earn money, gather real job task experiences, and build a meaningful portfolio. This includes placing optional additional assignments in online courses. Learners solve these individual assignments, provide peer reviews for other learners, and give feedback on each review they receive. Based on these data points, teams are selected to work on a final paid assignment. Companies offer these assignments and in return receive interview recommendations from the pool of learners together with solutions for their challenges. We report the results of a pilot deployment in an online programming course offered by UC BerkeleyX. Six learners out of 158 participants were selected for the paid group assignment, which paid \$600 per person. Four of these six were invited for interviews at the participating companies Crowdbotics (2) and Telefonica Innovation Alpha (2).